It’s funny how sometimes the smallest corner of the internet can give you a glimpse into much larger changes at work. My blog is tiny — so tiny, in fact, that I sometimes wonder if it even deserves to be called a “blog.” It’s more like a digital notebook, a space where I publish stray thoughts, reflections, and small experiments. I don’t optimize it for search engines. I don’t run ads. I don’t promote it on social media. It exists almost in defiance of the hyper-optimized web: a quiet island in a stormy sea.

And yet, even here, in this quiet place, I’ve noticed something remarkable happening.

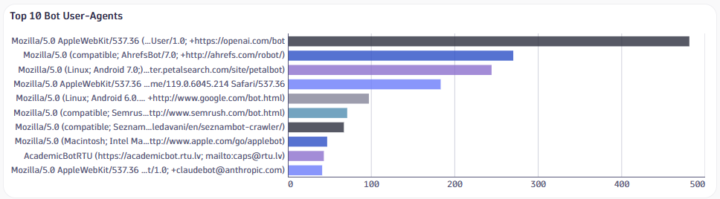

Over the past few months, I started peeking into the traffic patterns of my blog with a simple Dynatrace DQL query, mostly out of curiosity. I expected to see a trickle of human visitors — friends, maybe a random stranger or two, possibly someone who stumbled across an article by accident. What I didn’t expect was this: about half of my “readers” aren’t people at all. They’re bots.

And not just the old-fashioned kind of bots like the classic Google crawler. These are AI bots. My statistics show OpenAI leading the rankings by far, with Google’s own crawler showing up, and Anthropic’s AI bot making an appearance as well.

At first, I laughed. Who knew that my little blog, obscure and unpolished as it is, would end up with OpenAI and Anthropic as its most loyal readers? But the more I thought about it, the more it struck me: this small statistical sample is telling a story about the changing nature of the web. We’re moving from a human-centered internet — where the point of publishing was to reach other people — to an AI-centered one, where machines are often the primary audience.

And maybe that matters more than we think.

How I Discovered the Bot Half of My Audience

Let me start with the technical side, because it’s part of the story. I use Dynatrace to monitor some of my hobby projects, and one day I decided to run a simple query against the logs of my blog. Nothing fancy — just a DQL query to group visitors by user agent and count how many visits came from each. Then I put it on a little dashboard for fun.
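The grouping idea is simple enough to sketch in plain Python. This is not the DQL query behind the dashboard, only an illustration of the same "group by user agent and count" step. It assumes the web server writes access logs in the common "combined" format, where the user agent is the last quoted field. The function name `count_by_user_agent` and the sample log lines are made up for the example, and the user-agent strings are simplified.

```python
import re
from collections import Counter

# Assumption: "combined" access log format, which ends with
# "<referer>" "<user agent>". Other log formats need a different pattern.
UA_PATTERN = re.compile(r'"[^"]*" "(?P<ua>[^"]*)"\s*$')


def count_by_user_agent(lines):
    """Return a Counter mapping each user-agent string to its request count."""
    counts = Counter()
    for line in lines:
        match = UA_PATTERN.search(line)
        if match:  # silently skip lines that do not fit the assumed format
            counts[match.group("ua")] += 1
    return counts


# Invented sample data (documentation IP ranges, simplified user agents).
SAMPLE_LOG = [
    '203.0.113.5 - - [01/Sep/2025:10:00:00 +0000] "GET /a HTTP/1.1" 200 512 "-" "GPTBot/1.0"',
    '203.0.113.5 - - [01/Sep/2025:10:05:00 +0000] "GET /b HTTP/1.1" 200 734 "-" "GPTBot/1.0"',
    '198.51.100.7 - - [01/Sep/2025:10:06:00 +0000] "GET /a HTTP/1.1" 200 512 "-" "ClaudeBot/1.0"',
    '192.0.2.9 - - [01/Sep/2025:10:07:00 +0000] "GET /a HTTP/1.1" 200 512 "-" "Mozilla/5.0 Firefox/128.0"',
]

if __name__ == "__main__":
    for user_agent, requests in count_by_user_agent(SAMPLE_LOG).most_common():
        print(f"{requests:>4}  {user_agent}")
```

Note that this counts requests, not unique visitors. A single crawler fetching ten pages shows up as ten hits.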

The result was… surprising.

Instead of the quiet, human-scale traffic I imagined, the graph lit up with bot activity. About half of the total requests were clearly identifiable as bot-driven. OpenAI bots were at the top of the list, comfortably ahead of the others. Google’s crawler came in as expected, and Anthropic’s bot showed up too.

At first, I thought maybe my filter was wrong. Maybe I was accidentally counting automated pings or health checks. But the pattern held steady: bots really were accounting for about half of the requests.
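A sanity check along these lines can be sketched as follows: drop anything that looks like a health check, label the rest by operator, and compute the bot share. The marker substrings are assumptions. `GPTBot`, `ChatGPT-User`, `OAI-SearchBot`, `ClaudeBot`, and `Googlebot` are tokens these operators have documented, but the list is neither complete nor guaranteed to stay current. The health-check markers are purely hypothetical placeholders. Anything unrecognized lands in a "human or unknown" bucket, so the bot share is a lower bound at best.

```python
from collections import Counter

# Assumed user-agent substrings; check each operator's crawler docs before relying on them.
BOT_MARKERS = {
    "GPTBot": "OpenAI",
    "ChatGPT-User": "OpenAI",
    "OAI-SearchBot": "OpenAI",
    "ClaudeBot": "Anthropic",
    "Googlebot": "Google",
}

# Hypothetical names for monitoring traffic that should not count as readers.
HEALTH_CHECK_MARKERS = ("HealthCheck", "UptimeMonitor")

HUMAN_OR_UNKNOWN = "human or unknown"


def classify(user_agent):
    """Return an operator label, or None for traffic to exclude entirely."""
    if any(marker in user_agent for marker in HEALTH_CHECK_MARKERS):
        return None
    for marker, operator in BOT_MARKERS.items():
        if marker in user_agent:
            return operator
    return HUMAN_OR_UNKNOWN


def bot_share(user_agents):
    """Return (per-label counts, fraction of counted requests made by known bots)."""
    counts = Counter()
    for user_agent in user_agents:
        label = classify(user_agent)
        if label is not None:
            counts[label] += 1
    total = sum(counts.values())
    bots = total - counts[HUMAN_OR_UNKNOWN]
    return counts, (bots / total if total else 0.0)


# Invented sample: three bot requests, three browser requests, one health check.
SAMPLE_AGENTS = [
    "GPTBot/1.0",
    "GPTBot/1.0",
    "ClaudeBot/1.0",
    "Mozilla/5.0 Firefox/128.0",
    "Mozilla/5.0 Safari/605.1",
    "Mozilla/5.0 Chrome/126.0",
    "HealthCheck/1.0",
]

if __name__ == "__main__":
    counts, share = bot_share(SAMPLE_AGENTS)
    print(counts)
    print(f"bot share: {share:.0%}")
```

User-agent strings are self-reported and easy to spoof, so a check like this only shows what clients claim to be.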

I’m not running a content farm. I don’t publish SEO-optimized articles with juicy keywords that would attract hungry crawlers. My blog is about as far as you can get from clickbait. And yet, here were some of the biggest AI companies in the world, paying me a visit, over and over again.

The Changing Nature of “Search”

When I first started blogging, the only bot you really thought about was Googlebot. The whole game was: write something, hope Google indexed it, and then maybe — just maybe — someone searching for the right keyword might find it. Search engines were the middlemen between writers and readers.

Now, the middlemen have changed.

When OpenAI or Anthropic crawl my blog, they’re not just indexing it for a search engine in the old sense. They’re feeding it into large language models, systems that don’t just point users to my page, but actively reinterpret and repackage my content. A user might never see my blog at all. Instead, they’ll see a synthetic answer — a paraphrase, a summary, a blend of my words with thousands of others.

That’s a massive shift. The “reader” of my blog is no longer a human typing a query into Google. It’s an AI model, scanning and absorbing information to later spit back in a conversational answer box.

It’s like hosting a dinner party and realizing that half your guests are robots who won’t eat the food but will later serve it, reheated and repackaged, at someone else’s table.

My Blog as a Microcosm

Now, I know my blog is just one tiny data point in the massive web. But that’s what makes it so interesting. If even a small, private site like mine is seeing half of its visitors come from AI bots, what does that say about the broader web?

It suggests that the balance may already be tipping. A large share of the “reading” happening online may not be human at all. It’s automated systems collecting, parsing, and embedding text into vectors. On some sites, human visits might already be the exception rather than the rule.

In the old days, when you said “my blog gets 1,000 visits a month,” you meant people. Now, you might have to clarify: “1,000 visits, but only 500 from humans.” The rest are invisible machine readers.

That shift in itself is worth pausing over. It’s not just about traffic statistics. It’s about the audience for which we are now writing.

Writing for Machines (Even When We Pretend Not To)

If I’m honest, I’ve already been writing for machines without realizing it. We all have. Think of SEO — a whole industry devoted to shaping content so that Google’s algorithms would favor it. Writers learned to sprinkle in keywords, structure their headlines just so, and avoid certain pitfalls that would bury their posts on page 5 of the results.

That was the first phase of writing for machines.

Now, with AI bots, the stakes are different. I’m no longer writing to be ranked and linked; I’m writing to be digested. The machine doesn’t just point to my words; it absorbs them. It learns patterns, facts, and phrasings from what I write. My blog is no longer only a resource for humans directly; it’s also a resource for models that humans interact with later.

It raises a strange question: do I change how I write, knowing that OpenAI or Anthropic might be my largest audience? Should I tailor my posts not just to the handful of human readers who stumble across them, but also to the AI systems that will quote, paraphrase, and synthesize them?

It feels absurd, but maybe that’s where we’re headed.

The Invisible Conversations

Here’s the irony: people might already be “reading” my blog without ever knowing it.

Imagine someone asks ChatGPT a question that overlaps with a thought I once published. The answer might include a paraphrased echo of my words — stripped of context, remixed with other sources. That person will never know it came from me. They’ll never visit my site. They’ll never leave a comment or send an email.

It’s like my blog is having invisible conversations behind my back. My words live a second life inside machines, detached from the small, human-scale community I imagined when I hit “publish.”

That makes me feel oddly ghostlike: present and absent at the same time.

Bots as the New Gatekeepers

Google once reshaped the internet by making itself the gatekeeper of discovery. If you wanted to be found, you played by Google’s rules.

Now, AI bots are becoming the new gatekeepers. But they don’t just control discovery; they control interpretation. They decide not only whether my blog is relevant, but how it is summarized, rephrased, and presented.

In a sense, they’ve turned the web into raw material. My blog is no longer a finished product to be read on its own terms. It’s clay for generative systems to mold into something else.

That’s a profound shift in power. And it raises uncomfortable questions:

- What happens to attribution in a world where machines blend sources invisibly?

- What happens to the incentive to publish, if human readers never arrive?

- What happens to the diversity of voices, if AI systems filter and homogenize everything into a uniform tone?

Why My Tiny Blog Matters (At Least to Me)

Some might say: who cares? It’s just a blog. Of course bots visit. That’s the background noise of the internet.

But I think small blogs like mine are canaries in the coal mine. If even at this level half the traffic is machine-driven, it suggests just how thoroughly the web has been transformed.

It also makes me reflect on why I write in the first place. Am I writing for people? For myself? Or, strangely enough, for machines?

There’s a paradox here: the bots will probably always be my most reliable readers. They never skip an update. They never get bored. They never unsubscribe. In some ways, they’re the most loyal audience I’ve ever had.

And yet, they’re also the least satisfying. They don’t laugh at my jokes. They don’t challenge my ideas. They don’t send me an email saying, “Hey, I read your post and it really made me think.”

They consume without conversation.

A World Where Bots Outnumber Us

If my blog is 50% bots and 50% humans, it is probably not unusual. I suspect many larger sites are seeing an even higher proportion of automated traffic. It wouldn’t surprise me if, in a few years, bots make up the overwhelming majority of pageviews across the web.

That raises the question: what does the web become when it’s primarily bots talking to bots?

We might already be there. Models scrape blogs, learn from them, and then answer human questions. Humans ask questions, which trigger new crawls, which feed back into the system. It’s a feedback loop where human presence is the spark, but the bulk of the “conversation” is machine-to-machine.

The web, once imagined as a public square for humans, is increasingly becoming a training ground for AI.

Conclusion: The Blog I Never Meant to Write for Bots

When I started this blog, I thought of it as a way to share thoughts with a handful of curious humans. Now, I realize it’s also a resource for AI bots — and maybe, in terms of traffic, primarily for them.

That realization is both unsettling and oddly poetic. On one hand, it feels like a loss: the intimacy of the old web, where you wrote for people and people wrote back, is fading. On the other hand, it feels like a strange honor: my words are floating in the bloodstream of new technologies, living a second life in conversations I’ll never hear.

Maybe that’s the new reality of writing online: you’re always writing for two audiences at once. The visible humans who might stumble across your post, and the invisible machines who are definitely reading it, digesting it, and carrying pieces of it into the future.

And so here I am, staring at my Dynatrace dashboard, smiling at the absurdity of it all. My tiny, private blog has become half-human, half-machine. A microcosm of the web itself.

Who knew the bots would be my biggest fans?